January 2022, Vol. 249, No. 1

Features

Artificial Intelligence and Pipelines – A Smart Way to Go

By Richard Nemec, Contributing Editor

Today’s digital world defines so much of the global society from finances to socialization, education to work and play. It is no surprise that the energy sector, too, is increasingly digitized and data-driven. Step into any pipeline control room or compressor station and it becomes quite clear. And one of the tools increasingly being applied is artificial intelligence, or AI.

Industry researchers consider AI the most important general-purpose technology of today. It is rapidly entering industries and creating significant potential for innovation and growth. In health care, transportation, retail, media, and finance, AI has already triggered substantial changes and transformed the rules of competition.

Instead of relying on traditional and human-centered business processes, companies have created added value using AI solutions. Advanced algorithms trained on large and useful data sets, and continuously supplied with new data, drive the value creation process.

Oil, natural gas, mining, and construction companies are latecomers to digitalization, but they too are becoming increasingly dependent on AI solutions. Although the first applications of AI in the oil and gas industry were considered in the 1970s, only more recently has the industry started to look proactively for AI application opportunities.

AI leverages computers and machines to mimic the problem-solving and decision-making capabilities of the human mind, according to a description by IBM engineers.

They also note a 2004 definition by the late American computer science pioneer John McCarthy calling AI “the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable.”

Known as the father of AI for work he penned in the 1950s, McCarthy is remembered among other AI scholars along with the late Alan Turing, and more recently Stuart Russell and Peter Norvig, the authors of “Artificial Intelligence: A Modern Approach.”

The book has become one of the leading textbooks in the study of AI. Russell and Norvig outline four potential goals or definitions of AI, which they think “differentiates computer systems on the basis of rationality and thinking vs. acting.”

Turing, often referred to as the “father of computer science,” asked, “Can machines think?” From there, he offered a test, now widely known as the “Turing Test,” where a human interrogator tries to distinguish between a computer and human text response.

While this test has undergone much scrutiny since its publication many years ago, it remains an important part of the history of AI as well as an ongoing philosophical concept as it uses ideas around linguistics, according to a description by IBM scientists.

In the energy sector, AI often comes into play as either machine learning or data science applications. A variety of applications and technologies involving AI are permeating the energy industry and particularly the oil and gas pipeline sector.

“As AI becomes more popular and more data becomes available, results from its use can vary greatly,” said Shannon Katcher, executive director for digitalization and data at the Gas Technology Institute (GTI).

The variance is related to the quality and age of the data; often it might be old or incomplete. Katcher notes that AI is a tool that relies on quality information and a high degree of knowledge among the people who use it.

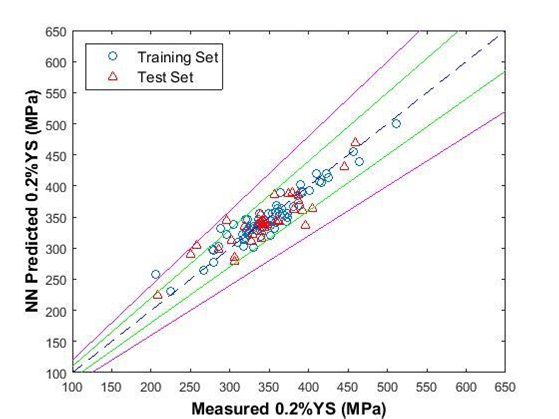

The Pipeline Research Council International (PRCI) underscores that AI is applicable to many aspects of the pipeline industry, especially where patterns are hard to see or when there are very large data sets to deal with. Ongoing PRCI research has used AI to:

- estimate pipe material strength and durability based on the chemical composition

- estimate expected engine and compressor operating parameters to compare against the actual parameters to identify decline in equipment performance and efficiency

- estimate engine emissions

- detect potential threats on the pipeline right-of-way using digital processing of satellite images.

More broadly, the combined pressures of fallout from climate change and the continuing shale revolution have sparked a renaissance for AI-enabled monitoring of energy operations and equipment. That renaissance is underscored by the emergence of firms like Colorado-based Project Canary, a public benefit corporation and independent certification organization that measures, tracks, and delivers environmental, social, and governance (ESG) data across the energy value chain.

Industry observers call Project Canary a leader in the rating and certification of “responsible energy operating practices, and a provider of science and technology-backed emission profiles via continuous monitoring hardware synced with a real-time dashboard.”

Its products include upstream (TrustWell) certifications, midstream certifications, and continuous monitoring to help identify the most responsible energy supply chain operators. Formed as a public benefit corporation, Project Canary operates with a team of scientists, engineers, and seasoned industry operators.

The chatter these days on the internet, at conferences, and in reports is ever more about technology and what it promises in the oil and natural gas space. There are skeptics and advocates alike, and there is no shortage of points of view. A 2020 Barclays report, “Frack to the Future; Oil’s Digital Rebirth,” said technology is going to ramp up efficiency throughout the industry. “Digital technologies will reduce the cost of production by almost 10% globally [over the next five years] while increasing [oil/gas] recovery rates by the same amount.”

While calling the projections “deflationary” for the oil services sector, the Barclays report called upstream digital advances a potential $30 billion market, six times its size in 2020.

American Petroleum Institute (API) blogger Sam Winstel has dipped into the technology discussions, citing other authors such as Mark Mills, a senior fellow at the conservative Manhattan Institute, and Amy Myers Jaffe, professor and director of the Climate Policy Lab at the Tufts University Fletcher School in Medford, MA, whose recent book is “Energy’s Digital Future.”

“When the markets rebalance, natural gas and oil producers will likely develop technologies to meet the renewed growth in energy demand,” Winstel wrote last year. “While the competitive landscape may change, rising commodity prices combined with digital efficiencies will unlock opportunities for American energy companies and their investors, and according to Mills, this could ‘ignite another shale super-cycle in U.S. production’.”

In November, Virginia-based PRCI was focused on several areas related to AI use in pipelines, according to Gary Choquette, executive director for research and IT.

Those include: (1) improving the reliability, detection, and accuracy capabilities of existing leak detection systems using machine learning, as part of a project co-funded by the U.S. Pipeline and Hazardous Materials Safety Administration (PHMSA); (2) knowledge-guided automation for integrity management of aging pipelines for hydrogen transport, as part of a PHMSA and Competitive Academic Agreement Program (CAAP) project in which PRCI will provide oversight; and (3) an oversight role with Pacific Northwest National Laboratory, which is working on machine learning methods to detect pipe anomalies using inline inspection data.

Choquette notes that AI in the oil and gas market is highly competitive, consisting of several major players. “In terms of market share, few of the major players currently dominate the market,” he said. “The companies are continuously capitalizing on acquisitions, in order to broaden, complement, and enhance their product and service offerings, to add new customers and certified personnel, and to help expand sales channels.”

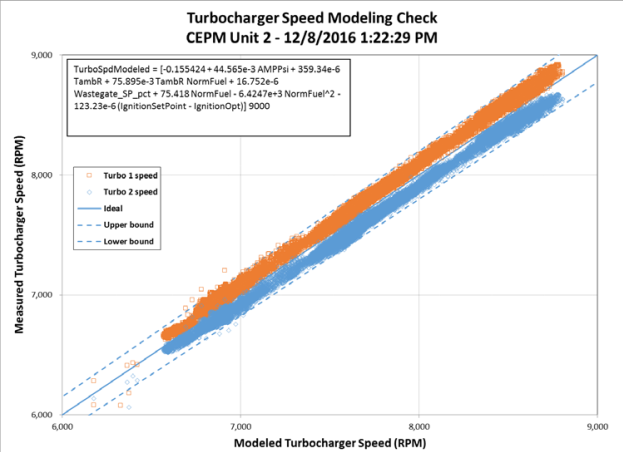

PRCI in conjunction with engineering researchers at Colorado State University has examined the growing computerization of large engine controls. Computers have become a vital part of the control of pipeline compressors and compressor stations. For many tasks, computers have helped to improve accuracy, reliability, and safety, and have reduced operating costs.

Drawing on computers’ excellent track record in completing repetitive, precise tasks that humans perform poorly, they now handle calculation, measurement, statistical analysis, control, etc., for pipeline engines.

The PRCI work has examined how computers are used for precise tasks at compressor stations, such as engine/turbine speed control, ignition control, horsepower estimation, or control of complicated sequences of events during startup and/or shutdown periods.

For PRCI, the principal areas of AI impact are in quality assurance, safety, reduced costs, better decision making on analytics, and new insights for exploration and production (E&P), according to Choquette, but he excludes the popular development of voice chatbots. “To my knowledge, no one is using voice chatbots,” he said. “It is possible someone is working with it, but it is not something we at PRCI have had any focus on.

“A lot of the emphasis at PRCI is on building technologies that allow operators to identify more real time and reliability issues when you have mass amounts of data,” he said, citing the example of using satellite imagery to look at pipeline right-of-way for objects that should not be there, such as a backhoe in an area where an operator has no current construction.

“Now you have a threat of third-party damage, but to hire people to identify the issue you would have to have people looking at thousands and thousands of images every day, whereas you can automatically download those images from a satellite and have a computer look for patterns that look like threats and then have an individual review those potential threats,” Choquette said.

Another way that PRCI commonly uses AI is for building “digital twins,” coming up with a mathematical model to predict how an element would perform in the real world. But AI systems need some human help to get good results.

“In many cases, we know the basic physics and the associated mathematics as we develop the model,” Choquette said, noting that feeding the models reduced data rather than raw data can produce better results.

For example, on a compressor twin to estimate expected efficiency, PRCI provides the compression ratio data rather than the suction and discharge pressures used to calculate the ratio. “Properly constructed, AI models will find nuances that would be hard to identify with the manual eye, especially for very large data sets.”
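Choquette's point about reduced data can be illustrated with a minimal Python sketch. It is not PRCI's model: the history records, the straight-line fit, and every function name here are assumptions made up for illustration. The model is given the compression ratio, not the two pressures behind it, and the gap between expected and observed efficiency is what an operator would watch.

```python
def compression_ratio(suction_psia: float, discharge_psia: float) -> float:
    """Reduce two absolute pressures to the single feature the model sees."""
    if suction_psia <= 0:
        raise ValueError("suction pressure must be positive (absolute units)")
    return discharge_psia / suction_psia


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical history: (suction psia, discharge psia, observed efficiency).
HISTORY = [
    (500.0, 750.0, 0.84),
    (400.0, 800.0, 0.82),
    (400.0, 1000.0, 0.80),
    (600.0, 900.0, 0.84),
]

# Train on the reduced feature (ratio), not on the raw pressures.
ratios = [compression_ratio(s, d) for s, d, _ in HISTORY]
efficiencies = [e for _, _, e in HISTORY]
SLOPE, INTERCEPT = fit_line(ratios, efficiencies)


def expected_efficiency(suction_psia: float, discharge_psia: float) -> float:
    """Efficiency the toy twin expects at this operating point."""
    return SLOPE * compression_ratio(suction_psia, discharge_psia) + INTERCEPT


def efficiency_shortfall(suction_psia, discharge_psia, observed):
    """Positive values mean the unit is running below the twin's expectation."""
    return expected_efficiency(suction_psia, discharge_psia) - observed
```

A real twin would use far more history and a richer model, but the design choice is the same: the physics that is already known (the ratio) is computed up front so the model does not have to rediscover it.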

At GTI in the Chicago suburb of Des Plaines, Illinois, work on excavation damage has resulted in a new system now in the marketplace.

“Each type of equipment has its own signature based on a number of sensors in the cab that identify when the equipment is moving, digging, or performing any other activity in the field. Combining that data with location data will send real-time alerts when it may be digging too close to a pipeline. That is one of the things we used AI to do and it’s already commercially available through the software and field services company, Hydromax USA,” Katcher said.
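The alerting step Katcher describes, combining an activity label with location, can be sketched in a few lines of Python. This is an illustration only, not the commercial system: the `EquipmentReading` type, the activity labels, the local x/y coordinates in meters, and the 10-meter buffer are all assumptions. The activity label is taken as given here; in the real product it would come from the AI model reading the cab sensors.

```python
import math
from dataclasses import dataclass


@dataclass
class EquipmentReading:
    equipment_id: str
    activity: str   # assumed labels: "idle", "moving", "digging"
    x_m: float      # position in a local planar grid, meters
    y_m: float


def distance_to_segment(px, py, ax, ay, bx, by):
    """Shortest distance from point P to the line segment A-B."""
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:
        return math.hypot(px - ax, py - ay)
    # Project P onto the segment and clamp to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def distance_to_pipeline(reading, pipeline):
    """Pipeline is a list of (x, y) vertices forming a polyline."""
    return min(
        distance_to_segment(reading.x_m, reading.y_m, ax, ay, bx, by)
        for (ax, ay), (bx, by) in zip(pipeline, pipeline[1:])
    )


def should_alert(reading, pipeline, buffer_m=10.0):
    """Alert only when the equipment is digging inside the buffer."""
    return (
        reading.activity == "digging"
        and distance_to_pipeline(reading, pipeline) <= buffer_m
    )


# Hypothetical pipeline route and readings.
PIPELINE = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0)]
near_dig = EquipmentReading("backhoe-1", "digging", 50.0, 5.0)
near_move = EquipmentReading("backhoe-1", "moving", 50.0, 5.0)
far_dig = EquipmentReading("backhoe-2", "digging", 50.0, 40.0)
```

With these made-up values, only `near_dig` triggers an alert: the machine that is merely moving near the line and the one digging 40 meters away do not.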

AI also has been used by GTI to build “mesh structure networks” to link field sensors that can find and pinpoint pipeline leaks. Similarly, if there are disturbances or unwanted equipment in rights-of-way, stationary sensors are able to detect the threats and communicate specific messages regarding the disturbance based on trained data sets.

GTI is involved in “Project Astra,” a global collaborative effort at the forefront of methane detection aimed at reducing emissions. The team is developing a high-frequency smart monitoring system to detect methane leaks on oil and gas wellpads.

“The goal is to optimize placement of methane sensors, use advanced modeling to identify sites with leaks, and feed data to a central platform that can quickly notify field response crews to speed repair,” Katcher said.

This automated monitoring will decrease the frequency of in-person inspections and allow them to be performed remotely, providing an affordable, efficient solution, she said.

The project, led by the University of Texas at Austin, includes Chevron, the Environmental Defense Fund, ExxonMobil, Microsoft, Pioneer Natural Resources Co., and Schlumberger, in addition to GTI. It aims to demonstrate a new approach using advanced technologies to reduce total methane emissions.

Katcher thinks GTI’s most exciting current project is a joint opportunity with the National Renewable Energy Laboratory (NREL) on developing a hydrogen sensor network, combining algorithms on vibration and other sensor technologies.

This project is deploying sensors to detect and locate hydrogen leaks using digital twin models, she said. A simulated model of the pilot area will first determine sensor placement. The model is then trained on data to identify when leaks occur in the virtual environment, so that it can identify leaks happening in real life.

“We’re doing a lot of work in building out digital solutions,” Katcher said. “We want to be able to better enable transitions since we are heading into a period where it is not clear what future energy systems will look like. We want to determine how we can make different technologies interoperable, so we are developing various digital twins and simulations to assess what’s going to happen as we begin to integrate parts of our energy systems that have not previously been used together.”

Both government and industry have a similar challenge to marshal the expertise in their respective staffs to be able to deal with the wealth of new opportunities for AI and other advanced technologies. This expertise is needed to adequately evaluate the onslaught of proposals coming to the forefront.

Katcher notes that the pipeline space has generated a lot of emerging technologies to identify anomalies and help operators be more proactive in terms of protecting infrastructure.

“Although a lot of old infrastructure has been replaced, there is still a lot out there,” Katcher said. “There will always be support for figuring out ways to extend the lifetime of our infrastructure investments. It is a matter of doing the same things more intelligently, and there is a lot of flexibility on the pipeline side, looking at different robotics, sensors, and scanning technologies; even monitoring ground movement, which can be a lot more complicated.”

Engineers at the think tanks are bullish about the future of AI and the oil and gas sector’s ability to manage greater volumes of more complex data. They also recognize there will be challenges, and that regulations will increasingly require more articulation of the reasons behind the use of particular technologies and their results, creating more transparency around AI.

The experts at PRCI, GTI, and other energy research centers note that AI is very broad, with different techniques under its rubric. Technology advancements and faster computers have allowed it to be applied to a wider variety of tasks. Going forward, its reach will continue to broaden.

Within the pipeline space, there have been a growing number of emerging technologies to identify anomalies. PRCI’s Choquette thinks the biggest impact on pipelines will come from AI’s ability to process large quantities of data that can’t be handled by traditional human interaction. “It is essentially a great filtering tool for mountains of data, so we can identify specific information outside the norm that people with the right expertise need to focus on,” Choquette said.
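The "filtering tool" idea can be shown with a small Python sketch. It stands in for whatever methods operators actually use, which the article does not specify. The sketch flags readings far from the norm using the modified z-score (median and median absolute deviation, with the commonly cited 3.5 cutoff); the readings and the function name are hypothetical.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return indices of readings far from the median.

    Uses the modified z-score: 0.6745 * |x - median| / MAD.
    If every reading equals the median (MAD == 0), nothing is flagged.
    """
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        return []
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - center) / mad > threshold
    ]


# Hypothetical sensor readings; index 5 is well outside the norm.
READINGS = [50.1, 49.8, 50.3, 50.0, 49.9, 57.2, 50.2, 50.1]
SUSPECT = flag_outliers(READINGS)
```

Only the flagged indices would go to a person with the right expertise for review, which is the division of labor Choquette describes.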

Currently, there are a lot of specific AI applications to address narrow problems, GTI’s Katcher said. “At GTI we pull together disparate technologies and systems and make them more interoperable.” The sensors and analytics that can assist in identifying risk to aging infrastructure, leak detection, and other areas can be applied to other networks and systems.

The focus has been on machine learning and data science along with operations and maintenance. Katcher thinks that today the investments in AI are “completely unprecedented.” Among government, industry, and venture capital funding, there is a lot of investment in energy, but particularly for various technologies that relate to near-term methane emissions reduction. There is also a lot of interest in finding ways to merge energy systems with the digital world.

“There are a lot more of the open-ended types of opportunities for broad energy system applications with digital tools,” Katcher said. “They’re looking for overarching ways to optimize solutions for greener energy. They still involve natural gas but are exploring the best options to decarbonize pathways.”

At PRCI, a current research project aims to discuss and demonstrate how AI is transforming the oil and gas upstream, seeking to answer three questions:

- What does de-risking in the oil and gas industry mean, and how is AI helping with it?

- Which processes can be accelerated by applying AI and how much?

- What has already been done, and what advancements are expected in the coming years?

If meaningful answers eventually are forthcoming, the pipeline sector should be in a stronger position to apply AI in more and better ways to advance efficiency, safety and the bottom line. There is nothing “artificial” about that outcome; it should be as real and tangible as net zero gas supplies.

Richard Nemec is a regular P&GJ contributing editor based in Los Angeles. He can be reached at rnemec@ca.rr.com.
