August 2021, Vol. 248, No. 8

Features

Taking a Quantitative Approach to Benchmarking Your Team

By Karen Collins, Head of Education, Systems and Services, ROSEN USA

Benchmarking is the process of comparing products, services and processes within an organization to an external standard, exploring how and why other organizations achieve higher levels of efficiency. This same methodology can be used to benchmark your pipeline integrity personnel.

The process follows a quantitative approach, first proposed by Antonucci and d’Ovidio, that applies a skill gap analysis to identify the “gaps” of skills and knowledge within an organization. Their algorithm considers “only the situations where a negative gap was found, that means that the competences owned by the worker are lower than the ones needed.”1

However, through a slight modification, we are not only able to identify those individuals with deficiencies in their skills and knowledge but also those individuals who exceed minimum requirements.

The gap analysis encompasses two key elements: the identification of the competencies to be analyzed and an external benchmark against which to compare the assessed personnel’s skills and knowledge.

Antonucci and d’Ovidio took a more formal approach to assessing personnel, but we propose starting first with an informal methodology – a skill self-assessment – before following up with a formal competency assessment.

A skill self-assessment is not a test; there are no right or wrong answers. The goal is to help individuals understand the extent of their ability and improve it without the need for a performance appraiser, empowering them to take control of their own career development.

A skill self-assessment “refers to the involvement of learners in making judgments about their own learning, particularly about their achievements and the outcomes of their learning. Self-assessment is formative in that it contributes to the learning process and assists learners to direct their energies to areas for improvement.”3

Competencies Identification

The role of a pipeline integrity engineer is diverse, and opinions differ on what competencies are required. We tasked a diverse panel of independent subject matter experts (SMEs) in the pipeline industry with identifying the core competencies essential for all pipeline integrity engineers, along with the associated knowledge and skills.

These requirements are contained in a competency standard.1 The panel agreed there are “eight core competencies essential for every pipeline integrity engineer to possess.”2

- Pipeline engineering principles

- Pipeline inspection and surveillance

- Pipeline integrity management

- Pipeline defect assessment

- Inline inspection (ILI) technologies and procedures

- ILI data analysis and reporting

- Stress analysis

- Fracture mechanics

External Benchmarking

External benchmarking is an important tool for an organization to understand how well the business is functioning and where it could improve. It is equally important that the benchmark for the gap analysis be set externally as well.

The external benchmark assigns a numeric value to minimum requirements of the knowledge and skills or sub-competencies necessary for a pipeline integrity engineer at a set competency level. Our external benchmark was established for the eight core competencies through data derived from a Job Task Analysis validation study, which elicited empirical feedback from incumbent practitioners.2

The respondents were first asked to estimate what percentage of the pipeline integrity engineer’s job role was related to each identified competency, with the sum of the eight competencies equaling 100%. Next, they were asked to rate the knowledge/skills or sub-competencies for each of the eight competencies on their importance to the role of a foundation-level3 pipeline integrity engineer using a five-point Likert rating scale, expressed as statements ranging from “not at all important” to “very important.”

Although each category is assigned an integer, the benchmark cannot simply be established by averaging the responses. The average of “important” and “very important” is not “important-and-a-half.” Therefore, the external benchmark for each sub-competency was calculated using the measure of consensus.

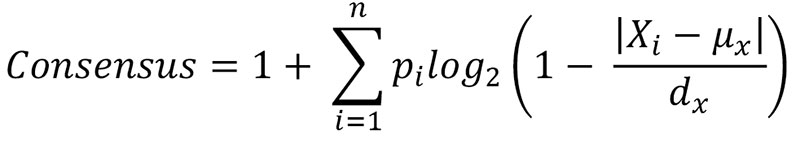

This metric gives the level of agreement as the percentage of consensus among respondents. The equation of the consensus metric is defined as:

“Where $X$ is represented as the Likert scale, $p_i$ is the probability of the frequency associated with each $X$, $d_X$ is the width of $X$, $X_i$ is the particular Likert attribute, and $\mu_X$ is the mean of $X$.”

First, the benchmark for each sub-competency is calculated. The benchmark for the competency as a whole then is the average of the sub-competencies.

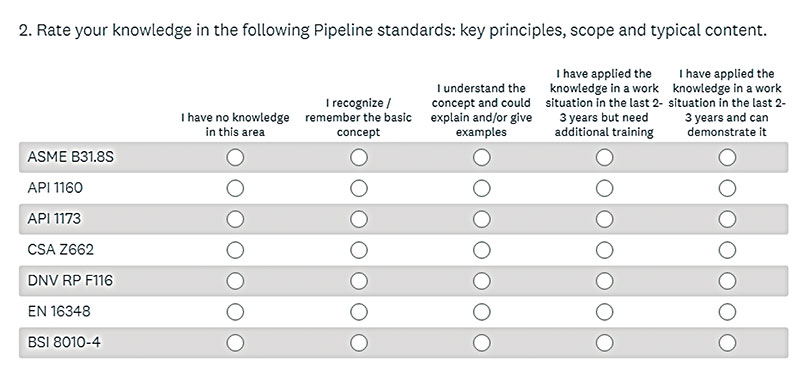
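The benchmark calculation can be sketched in a few lines of Python. The equation itself is not reproduced in the text here, so this sketch assumes the consensus measure takes the form Cns(X) = 1 + Σ p_i log2(1 − |X_i − μ_X| / d_X), which matches the symbol definitions quoted above. The function names and the 1-to-5 category values are illustrative assumptions, not taken from the study.

```python
import math


def consensus(counts, values=None):
    """Consensus (0 to 1) for one Likert item, given response counts per category.

    Assumed form: 1 + sum(p_i * log2(1 - |X_i - mean| / width)).
    """
    if values is None:
        # Assumption: categories are coded 1..n (1..5 for a five-point scale).
        values = list(range(1, len(counts) + 1))
    total = sum(counts)
    if total == 0:
        raise ValueError("at least one response is required")
    probs = [c / total for c in counts]
    mean = sum(p * x for p, x in zip(probs, values))
    width = max(values) - min(values)
    # Categories nobody selected (p == 0) contribute nothing to the sum.
    return 1 + sum(
        p * math.log2(1 - abs(x - mean) / width)
        for p, x in zip(probs, values)
        if p > 0
    )


def competency_benchmark(sub_competency_counts):
    """Average the sub-competency consensus values into one competency benchmark."""
    scores = [consensus(counts) for counts in sub_competency_counts]
    return sum(scores) / len(scores)
```

Under this form, complete agreement (every respondent choosing the same category) gives 1, and an even split between the two extreme categories gives 0. Multiplying by 100 expresses the result as a percentage.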

Skill Self-Assessment Survey

The skill self-assessment is presented in a survey format that mirrors the competency standard’s sub-competencies, the same sub-competencies used for the external benchmarking. In some cases, a sub-competency should be broken down further to a more granular level, which gives the individual an opportunity to receive partial credit for those areas in which they may have some knowledge (Figure 1). Standards requirements, for example, include ASME B31.8S, API 1160, API 1173, CSA Z662, DNV RP F116, BSI 8010-4 and EN 16348.

The responses are also expressed on a five-point Likert rating scale; however, the integers assigned to each category range from “0” to “4,” where an individual with a score of “0” has no knowledge/skills for that sub-competency. The survey also allows individuals to identify areas in which they would like additional training, providing direction for future training (Figure 1).

The scoring for the individual’s self-assessment is a bit more straightforward. The Likert scale for self-assessment is converted from integers (0, 1, 2, 3 and 4) to scores (0%, 25%, 50%, 75% and 100%). In cases where a sub-competency has been broken down, the respondents’ assigned values are averaged.

For example, if an individual assigns the category “I recognize/remember the basic concept” to a sub-competency, the score will equal 25%: (1 − 0) / 4 × 100.
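The conversion described above is simple enough to express directly. The following is a minimal sketch, assuming ratings are stored as integers from 0 to 4; the function name is hypothetical.

```python
def self_assessment_score(ratings, scale_max=4):
    """Convert 0-4 Likert ratings for one sub-competency into a percentage.

    A sub-competency that was not broken down has a single rating;
    one that was broken down has several, which are averaged.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    for rating in ratings:
        if not 0 <= rating <= scale_max:
            raise ValueError(f"rating {rating} is outside 0..{scale_max}")
    return sum(rating / scale_max * 100 for rating in ratings) / len(ratings)
```

A single rating of 1 yields 25.0, matching the example above. A sub-competency broken into four items rated 4, 2, 0 and 2 yields 50.0, which is where the partial credit comes from.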

Competency Assessments

Competency assessments take a more formal approach than a self-assessment; they involve the measurement of an individual’s competency against a competency standard. There are different methods for assessing competence – performance observations, interviews and formal assessment through examination – that all have the same goal: to “identify and fill gaps in individual’s competencies before they contribute to a major incident.”4

Formal written exams have an advantage over interview and performance observations. They provide “a platform that is valid, repeatable and reliable measure of skills and knowledge as defined within the competency assessment.

“It is important to note that the formal exam must follow the assessment blueprint.”2 Exams are also much more practical: they are easier to administer, and the resources they require are easier to manage.

Using the data previously gathered in our Job Task Analysis validation study, an “assessment blueprint” was developed. The assessment blueprint links the assessment to the required knowledge and skills, and it provides a weighting within each sub-competency. In short, it determines the percentage of test questions from each sub-competency presented during a formal competency assessment.

For example, in a multiple-choice exam with 40 questions covering nine sub-competencies, the questions are distributed with a greater emphasis on sub-competencies 2, 3, 5 and 9. (Figure 2)

Since the weights are applied through the number of questions, all questions have the same point value. The percentage of questions answered correctly determines the score on each sub-competency and on the exam as a whole.
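One way to turn blueprint weights into question counts is sketched below. The weights shown are hypothetical placeholders chosen only to emphasize sub-competencies 2, 3, 5 and 9; the actual values come from the blueprint in Figure 2. The largest-remainder rounding rule is likewise an assumption, used so the counts always sum to the exam length.

```python
def allocate_questions(weights, total_questions):
    """Split total_questions across sub-competencies in proportion to weights."""
    total_weight = sum(weights)
    exact = [w * total_questions / total_weight for w in weights]
    counts = [int(share) for share in exact]
    leftover = total_questions - sum(counts)
    # Give any remaining questions to the largest fractional shares.
    by_fraction = sorted(
        range(len(weights)), key=lambda i: exact[i] - counts[i], reverse=True
    )
    for i in by_fraction[:leftover]:
        counts[i] += 1
    return counts


def percent_correct(correct, asked):
    """Score for a sub-competency or a whole exam; every question counts equally."""
    return correct / asked * 100


# Hypothetical weights (percent) for nine sub-competencies.
HYPOTHETICAL_WEIGHTS = [5, 15, 15, 5, 20, 10, 5, 5, 20]
question_counts = allocate_questions(HYPOTHETICAL_WEIGHTS, 40)
```

With these placeholder weights, the 40 questions are split as 2, 6, 6, 2, 8, 4, 2, 2 and 8. Because each question carries the same point value, the same `percent_correct` function serves for a single sub-competency and for the exam as a whole.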

Gap Analysis

The gap analysis is very straightforward. In the algorithm, $G_{ij}$ indicates the gap for the i-th individual in the j-th skill (sub-competency); $P_{ij}$ indicates the level of the skill possessed by the i-th individual; and $N_{ij}$ is the score of necessity, or benchmark, required of the individual.

$$G_{ij} = N_{ij} - P_{ij}$$
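The gap formula above can be applied to one individual as in the following sketch. The data layout (dictionaries keyed by sub-competency, scores in percent) and the function names are assumptions for illustration. With the gap written as benchmark minus score, a positive value means the individual falls short of the benchmark and a negative value means the individual exceeds it; both are kept, in line with the modification described earlier.

```python
def skill_gaps(benchmarks, scores):
    """Gap per sub-competency for one individual: benchmark minus score."""
    return {skill: benchmarks[skill] - scores[skill] for skill in benchmarks}


def split_by_gap(gaps):
    """Separate sub-competencies below the benchmark from those at or above it."""
    below = {skill: gap for skill, gap in gaps.items() if gap > 0}
    meets_or_exceeds = {skill: gap for skill, gap in gaps.items() if gap <= 0}
    return below, meets_or_exceeds
```

For instance, a hypothetical benchmark of 70 against a score of 55 gives a gap of 15 (training needed), while a benchmark of 60 against a score of 75 gives a gap of -15 (requirement exceeded).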

We can apply the results from the gap analysis to individuals as well as to teams. Consider the following scenarios:

Scenario 1:

Engineer A has three years of experience and has completed his or her skill self-assessment. In three of the sub-competencies, training was requested. Once the training was completed, a formal assessment was given. Engineer A improved in those areas where training was given but still fell below the established benchmark.

The formal assessment gives management insight into how accurately personnel can identify their own skills and knowledge.

Scenario 2:

Company X employs five pipeline integrity engineers, all of whom have completed a formal assessment in a single competency. The engineers’ overall scores are benchmarked against the required score, as illustrated in the following figure. Utilizing the results, the integrity manager can group personnel according to their strengths (Engineer A with Engineer E, for example), ensuring competency within all individual teams.

Scenario 3:

The training budget has been reduced due to market conditions. The department manager wants to target those competencies that require the most attention to optimize the use of available resources. The pipeline integrity team is formally assessed in eight competencies; based on the scores, the department manager can prioritize training.
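A simple way to rank competencies for a scenario like this is sketched below. Ranking by the team's mean gap is one possible prioritization rule, not one prescribed by the article, and the data layout and names are hypothetical.

```python
def training_priorities(benchmarks, team_scores):
    """Rank competencies by the team's mean gap (benchmark minus score), largest first.

    benchmarks: {competency: required score}
    team_scores: {engineer: {competency: assessed score}}
    """
    mean_gaps = {}
    for competency, required in benchmarks.items():
        gaps = [required - scores[competency] for scores in team_scores.values()]
        mean_gaps[competency] = sum(gaps) / len(gaps)
    # Largest positive mean gap first: the team is furthest below that benchmark.
    return sorted(mean_gaps.items(), key=lambda item: item[1], reverse=True)
```

The competencies at the top of the returned list are those where the team as a whole sits furthest below the benchmark, so they are the natural first candidates for a limited training budget. A manager might instead rank by the number of engineers below the benchmark; the structure of the calculation stays the same.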

Summary

Skill gap analysis is a valuable tool. It gives personnel the opportunity to identify the knowledge and skills needed to advance in their career.

Additionally, the results of a skill gap analysis provide management with indispensable information about the crucial skills and knowledge missing within their teams, empowering them to develop or revise their curricula for educational and training programs as well as to optimize the use of resources.

References

Antonucci, F. and D. d’Ovidio, “An Informative System Based on the Skill Gap Analysis to Planning Training Courses,” Applied Mathematics, Vol. 3 No. 11 (2012), p. 1619-1626; doi: 10.4236/am.2012.311224.

Collins, K., M. Unger, A. Dainis, “How Do I Ensure ‘Staff Competency’ in my Pipeline Safety Management System?” Proceedings of the 2020 13th International Conference IPC2020: Calgary, Alberta, Canada, September 28-30, 2020.

Falchikov, N., D. Boud, “Student Self-Assessment in Higher Education: A Meta-Analysis,” Review of Educational Research, 59 (1989), p. 395-430.

Wright, M., D. Turner, C. Horbury, “A Review of Current Practice. Competence assessment for the hazardous industries,” Crown, Colegate, (2003), p. 23.

Author: Karen Collins is the head of education, systems and services business line at ROSEN USA and is responsible for providing education and competency solutions in the U.S. and Mexico. With over 12 years of experience in pipeline integrity, she is committed to promoting competency development, evaluation and competency standards in the oil and gas industry.
